Artificial intelligence (AI) drives innovation and efficiency in modern computing. Its applications range from data analysis to automating routine tasks.

AI has become a central part of modern computing, shaping how businesses and individuals solve problems and make decisions. Using algorithms and machine learning, AI systems can process huge amounts of data faster, and often more accurately, than manual methods.

This capability is vital as the volume of data keeps growing, and it now underpins industries such as healthcare, finance, and e-commerce. AI's adaptability also enables personalized experiences, improving how people interact with technology.

Smart technology is now built into everyday devices, making it more accessible and the world more connected. As AI evolves, it is likely to unlock new potential in computing.

This may lead to innovations that can reshape our future. Explore exclusive quantum tools and software that can accelerate your learning and adoption: Discover Quantum Deals on AppSumo.

The History of the Computer: Who Invented It and When?

The story of the modern computer is one of innovation and technological evolution. From early calculating machines to today's powerful systems, understanding the history of computers helps us appreciate how far technology has come.

But when was the first computer made, and who invented the computer? Let’s explore the fascinating timeline and key contributors behind this revolutionary invention.

When Was the First Computer Invented?

The concept of a programmable machine dates back to the early 19th century. In 1837, Charles Babbage, an English mathematician, designed the Analytical Engine, considered one of the first theoretical computers. Although it was never completed, it laid the groundwork for future innovations.

Fast forward to the 20th century: the first fully operational computers emerged during World War II. The Zuse Z3, developed by German engineer Konrad Zuse in 1941, is often recognized as the first working programmable digital computer. It was electromechanical, built from telephone relays rather than electronic components.

Shortly after, in 1946, the ENIAC (Electronic Numerical Integrator and Computer) was unveiled in the United States. This massive machine is often credited as the first general-purpose electronic computer.

So, when were computers invented? While the foundational ideas appeared in the 1800s, modern computers began taking shape in the 1940s.

Who Invented the Computer?

Answering who invented the computer depends on how you define “computer.” If we focus on the theoretical basis, Charles Babbage is often credited as the father of the computer. His designs for the Analytical Engine introduced key concepts such as a memory unit and programmable instructions.

For the first working programmable machine, Konrad Zuse's Z3 deserves recognition. ENIAC's creators, John Presper Eckert and John Mauchly, also played a pivotal role in advancing computing technology; their work marked the beginning of electronic computing as we know it today.

Key Milestones in Computer History

- 1941: Zuse Z3: The first programmable digital computer.

- 1944: Harvard Mark I: An electromechanical computer used during World War II.

- 1946: ENIAC: The first general-purpose electronic computer, capable of complex calculations.

- 1950s: Transistors began replacing vacuum tubes, making computers smaller, more reliable, and more efficient.

- 1980s: The rise of personal computers, with companies like Apple and IBM leading the market.

Each of these milestones contributed to the history of computers, shaping them into the versatile tools we rely on today.

The Rise of Modern Computing

The world has witnessed an extraordinary transformation in technology. Modern computing now stands at the forefront of innovation, shaping how we live, work, and interact. From smartphones to smart homes, computing technology is woven into nearly every aspect of our lives.

This shift from simple machines to powerful computers marks a new era in human capability and connectivity.

Evolution From Traditional To Modern Frameworks

The journey from basic computing systems to advanced digital solutions is remarkable. Traditional frameworks relied on manual intervention and were limited in speed and efficiency. In contrast, modern frameworks thrive on automation and sophisticated algorithms. This evolution has paved the way for seamless integration and scalable systems.

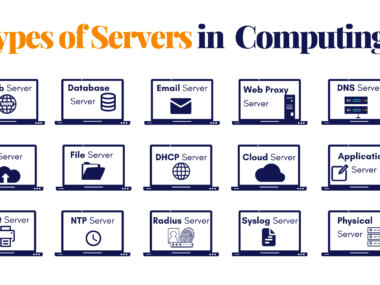

- Mainframes gave way to personal computers.

- Physical servers transitioned to cloud computing.

- Software development moved from waterfall to agile methodologies.

Key Technologies Driving Change

Several groundbreaking technologies have been pivotal in shaping modern computing. Let’s delve into some that stand out:

| Technology | Impact |
|---|---|
| Cloud Computing | Revolutionized data storage and access |
| Artificial Intelligence | Enabled smart decision-making systems |
| Big Data Analytics | Transformed data into actionable insights |
| Internet of Things (IoT) | Connected devices for smarter living |

These technologies have redefined the boundaries of what’s possible, ushering in an age of unprecedented innovation and growth.

Core Technologies Shaping The Industry

The landscape of modern computing constantly evolves, with breakthrough technologies emerging at a remarkable pace. These core technologies are not just buzzwords; they are reshaping industries, redefining user experiences, and setting new standards for personal and professional computing. Let’s delve into the core technologies currently shaping the industry.

Artificial Intelligence & Machine Learning

Artificial intelligence (AI) and machine learning (ML) stand at the forefront of technological innovation. Together, they form the backbone of modern computing, influencing sectors from healthcare to finance. AI and ML enable computers to learn from data, leading to smarter and more autonomous systems. Here are some key points:

- Automated Decision-Making: AI systems can make routine, data-driven decisions with little or no human intervention.

- Personalization: ML algorithms tailor experiences to individual user preferences.

- Predictive Analytics: Businesses use historical data to forecast trends and behaviors.
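To make the predictive-analytics idea concrete, here is a minimal Python sketch that fits a straight-line trend to past values by ordinary least squares and extrapolates it forward. The function names and the sales figures are hypothetical, and real forecasting systems typically use richer models that account for seasonality and uncertainty.

```python
def fit_linear_trend(values: list[float]) -> tuple[float, float]:
    """Fit y = slope * t + intercept by ordinary least squares, with t = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    # Covariance of time and value, divided by the variance of time, gives the slope.
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    intercept = y_mean - slope * t_mean
    return slope, intercept


def forecast(values: list[float], steps_ahead: int = 1) -> float:
    """Extrapolate the fitted trend `steps_ahead` periods past the last observation."""
    slope, intercept = fit_linear_trend(values)
    return slope * (len(values) - 1 + steps_ahead) + intercept


monthly_sales = [100.0, 110.0, 120.0, 130.0]  # hypothetical data
print(forecast(monthly_sales))  # 140.0
```

A straight line is the simplest possible "learned" pattern; the same fit-then-predict structure carries over to the more sophisticated models used in practice.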

Blockchain And Its Impact

Blockchain technology is a game-changer, known for powering cryptocurrencies like Bitcoin. Its implications, however, extend far beyond digital currencies. With its decentralized and secure ledger system, blockchain is transforming various sectors. Key impacts include:

| Area | Impact |
|---|---|
| Supply Chain | Enhanced traceability and transparency |
| Banking | Secure and efficient transactions |
| Healthcare | Safe storage of patient records |
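The "secure ledger" property comes largely from hash chaining: each block stores the hash of the block before it, so altering an old record breaks the links that follow. The sketch below, built on Python's standard `hashlib` module, illustrates only that idea. The `Block` class and the helper functions are hypothetical, and the sketch leaves out the consensus, digital signatures, and peer-to-peer replication that real blockchains depend on.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class Block:
    index: int
    data: str
    prev_hash: str
    block_hash: str = ""

    def compute_hash(self) -> str:
        # Hash this block's contents together with the previous block's hash,
        # so every block commits to the whole history before it.
        payload = json.dumps(
            {"index": self.index, "data": self.data, "prev_hash": self.prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: list[str]) -> list[Block]:
    """Link records into a chain, starting from an all-zero placeholder hash."""
    chain: list[Block] = []
    prev_hash = "0" * 64
    for i, record in enumerate(records):
        block = Block(index=i, data=record, prev_hash=prev_hash)
        block.block_hash = block.compute_hash()
        chain.append(block)
        prev_hash = block.block_hash
    return chain


def is_valid(chain: list[Block]) -> bool:
    """Recompute every hash and check that each block points at its predecessor."""
    for i, block in enumerate(chain):
        if block.block_hash != block.compute_hash():
            return False
        if i > 0 and block.prev_hash != chain[i - 1].block_hash:
            return False
    return True


ledger = build_chain(["ship 40 crates", "receive 40 crates", "invoice paid"])
print(is_valid(ledger))  # True
ledger[1].data = "receive 35 crates"  # tamper with a past record
print(is_valid(ledger))  # False
```

On its own, this only makes tampering detectable: someone with full control of the data could recompute every later hash. In a real blockchain, agreement among many independent nodes is what makes such a rewrite impractical.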

The Proliferation Of IoT

The Internet of Things (IoT) is connecting everyday devices to the web, creating a network of smart gadgets. This interconnectivity brings convenience and efficiency to our daily lives. IoT's growth is staggering, with tens of billions of devices expected to be connected in the coming years. Key benefits include:

- Home Automation: Control home devices remotely for convenience and energy savings.

- Industrial IoT: Monitor and manage industrial equipment for safety and efficiency.

- Wearable Tech: Track health metrics for a better lifestyle.

Economic Implications Of Advanced Computing

Advanced computing changes how businesses operate and handle money. It makes many processes faster and cheaper, and it lets companies offer new products and services that bring in more revenue. These effects matter for the wider economy.

Cost Savings And Efficiency Gains

Companies use advanced computing to save money. They get work done quicker with fewer mistakes. This means they spend less on doing the same jobs. Here are some ways they save:

- Automated tasks: Robots and computers handle repetitive jobs, so people can focus on harder work.

- Cloud computing: Businesses use online services instead of buying expensive machines.

- Data analysis: Computers find patterns. Companies understand customers better and waste less.

New Business Models And Revenue Streams

Computers let companies make money in new ways. They offer services online and reach more people. Here are examples:

| Model | Description | Example |
|---|---|---|
| Subscription | Customers pay regularly. | Streaming services |
| Freemium | Basic for free; pay for more. | App upgrades |
| On-demand | Pay for what you use. | Cloud storage |
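The choice between the subscription and on-demand models comes down to simple arithmetic. In this Python sketch the prices and monthly usage are invented for illustration; the break-even point is the monthly usage at which both models cost the same.

```python
def subscription_cost(months: int, monthly_fee: float) -> float:
    """Flat fee every month, regardless of how much is used."""
    return months * monthly_fee


def on_demand_cost(usage_per_month: list[float], unit_price: float) -> float:
    """Pay only for the units actually consumed."""
    return sum(usage_per_month) * unit_price


def break_even_usage(monthly_fee: float, unit_price: float) -> float:
    """Monthly usage above which the flat subscription becomes the cheaper option."""
    return monthly_fee / unit_price


# Hypothetical figures: $5.00 per month flat vs. $0.25 per GB used each month.
usage_gb = [4.0, 10.0, 6.0]
print(subscription_cost(len(usage_gb), monthly_fee=5.0))   # 15.0
print(on_demand_cost(usage_gb, unit_price=0.25))           # 5.0
print(break_even_usage(monthly_fee=5.0, unit_price=0.25))  # 20.0
```

With these made-up numbers, a customer using less than 20 GB a month pays less on demand, while a heavier user would save with the subscription.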

Advanced computing also creates new kinds of jobs, which helps the economy grow.

Computing Power And Energy Efficiency

Modern computing demands ever-increasing computational power to tackle complex tasks. This growth comes with a significant rise in energy consumption, a challenge for both environmental sustainability and operational costs. Energy-efficient computing is essential for a sustainable future. Let's explore the challenges and innovations in this critical area.

Challenges Of Increased Energy Demands

Advancements in technology have led to powerful hardware that requires more energy. The digital age pushes the limits of data centers and personal devices alike, resulting in a surge of energy demand. The challenges include:

- Higher operational costs due to increased electricity consumption.

- Environmental impact from non-renewable energy sources.

- Thermal management difficulties in dissipating excess heat.

Innovations In Green Computing

Addressing energy concerns, innovations in green computing aim to reduce the carbon footprint and lower energy bills. These innovations include:

- Energy-efficient processors that deliver more computing performance per watt.

- Advanced cooling systems that minimize energy use for thermal management.

- Renewable energy sources for powering data centers.

Companies are investing in eco-friendly technologies to ensure long-term sustainability and cost-effectiveness.

Cybersecurity In The Age Of Complexity

Cybersecurity is a pillar of modern computing. As more of our lives and work move online, protecting data becomes crucial. Cyber threats evolve rapidly, making robust security measures essential.

Rising Threats And Vulnerabilities

Today's digital landscape is a battleground. Hackers grow smarter and their methods more sophisticated. With each technological advance, new weaknesses emerge. Data breaches can lead to massive losses. Users must stay vigilant against phishing, malware, and ransomware attacks.

- Increase in remote work leading to new security challenges

- Growing sophistication of phishing scams

- Rise in ransomware targeting businesses and individuals

- Unpatched software creating entry points for hackers

Advancements In Cybersecurity Technologies

To combat growing threats, technology firms invest heavily in cybersecurity. Innovations like artificial intelligence (AI) and machine learning (ML) are game-changers: they can help detect suspicious activity early and stop some attacks before they cause damage.

| Technology | Benefits |
|---|---|
| AI and ML | Automated threat detection and response |
| Blockchain | Enhanced data integrity and security |
| Cloud Security | Flexible, scalable protection for data |
| Zero Trust Architecture | Strict access controls and verification |
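Zero Trust Architecture replaces "trust anything inside the network" with checks on every single request. The following Python sketch shows that principle in miniature: identity, device health, and a least-privilege permission are verified each time, while the caller's network location is deliberately ignored. The `Request` fields, the `PERMISSIONS` table, and `authorize` are all hypothetical; production systems rely on dedicated identity providers and policy engines.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    token_valid: bool            # e.g. result of verifying a signed session token
    device_compliant: bool       # e.g. disk encrypted, OS patched
    resource: str
    action: str
    from_internal_network: bool  # intentionally NOT used by the policy below


# Hypothetical least-privilege policy: each user gets only the actions they need.
PERMISSIONS: dict[str, set[tuple[str, str]]] = {
    "alice": {("payroll", "read")},
    "bob": {("payroll", "read"), ("payroll", "write")},
}


def authorize(req: Request) -> bool:
    """Evaluate every request on its own merits; being 'inside' grants nothing."""
    if not req.token_valid:
        return False
    if not req.device_compliant:
        return False
    return (req.resource, req.action) in PERMISSIONS.get(req.user, set())


inside = Request("alice", True, True, "payroll", "write", from_internal_network=True)
print(authorize(inside))  # False: alice lacks write access, even on the internal network
```

Denying by default (unknown users get an empty permission set) is the key design choice: access must be explicitly granted rather than implicitly assumed.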

Continuous updates and training are vital. Users and businesses must adopt best practices to secure their systems. Staying informed about the latest security trends is key.


The Role Of Data In Decision Making

The role of data in decision-making has become a cornerstone of modern computing. As businesses and organizations generate vast amounts of data, the ability to harness this information for strategic decisions is crucial. Data-driven decision-making can lead to more accurate, efficient, and predictable business outcomes.

Big Data Analytics

Big data analytics helps organizations uncover complex patterns in very large datasets. Firms use it to:

- Spot trends that can improve products and services.

- Predict customer behavior to tailor marketing strategies.

- Improve operations and reduce costs.

By analyzing large datasets, companies gain insights that were once beyond reach.

Real-time Data Processing

Real-time data processing allows businesses to act swiftly. This technology helps:

- Monitor transactions as they happen.

- Respond to customer inquiries instantly.

- Adjust operational procedures on the fly.

With up-to-the-minute data, decision-makers can pivot strategies immediately for better outcomes.
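A common pattern behind real-time monitoring is to evaluate each event the moment it arrives against a short window of recent history. This Python sketch flags a transaction that is much larger than the recent average. The `TransactionMonitor` class, the window size, and the 3x threshold are arbitrary assumptions, and a simple rule like this only stands in for the statistical and machine-learning models that production fraud-detection systems use.

```python
from collections import deque


class TransactionMonitor:
    """Flag amounts far above the recent average, updating as each event arrives."""

    def __init__(self, window_size: int = 5, threshold: float = 3.0) -> None:
        # A bounded deque keeps only the most recent amounts; old ones fall off.
        self.window: deque[float] = deque(maxlen=window_size)
        self.threshold = threshold  # flag amounts > threshold x recent mean

    def process(self, amount: float) -> bool:
        flagged = False
        if self.window:
            recent_mean = sum(self.window) / len(self.window)
            flagged = amount > self.threshold * recent_mean
        self.window.append(amount)
        return flagged


monitor = TransactionMonitor(window_size=3, threshold=3.0)
stream = [20.0, 25.0, 30.0, 400.0, 22.0]
print([monitor.process(x) for x in stream])  # [False, False, False, True, False]
```

Because each event is checked against a fixed-size window, the work per event stays constant no matter how long the stream runs, which is what makes this style of check suitable for live data.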

Ethical Considerations And AI

Artificial intelligence (AI) changes how we live and work. It is important to think about ethics when we create and use AI. Making sure AI is fair and safe is crucial for everyone.

Bias And Fairness In Algorithms

AI must treat everyone equally. Sometimes, AI can be unfair without meaning to be. This happens because of the data it learns from. For example, if an AI only sees pictures of blue cars, it might think all cars are blue. We must check and fix these biases.

- Bias: When AI makes unfair decisions.

- Fairness: Making sure AI treats everyone the same way.

Experts use a range of tools and tests to detect and reduce bias, helping AI systems make fairer decisions.
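One simple test of this kind is demographic parity, which compares how often each group receives a positive decision. The Python sketch below computes the largest gap in approval rates between groups; the function names and the loan data are hypothetical. Demographic parity is only one of several fairness metrics, and a small gap does not by itself prove that a model is fair.

```python
def positive_rate(predictions: list[int], groups: list[str], group: str) -> float:
    """Share of members of `group` who received a positive (1) decision."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    if not selected:
        raise ValueError(f"no records for group {group!r}")
    return sum(selected) / len(selected)


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Largest gap in positive-decision rates between any two groups (0 means parity)."""
    rates = [positive_rate(predictions, groups, g) for g in set(groups)]
    return max(rates) - min(rates)


# Hypothetical loan decisions (1 = approved) for applicants in groups "A" and "B".
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
group_of = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(demographic_parity_difference(decisions, group_of))  # 0.5
```

Here group A is approved 75% of the time and group B only 25%, a gap that would prompt a closer look at the training data and the model.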

Regulatory And Ethical Frameworks

Rules are important for safe AI use. Governments are introducing laws and guidelines to steer AI development and protect people from harm.

| Jurisdiction | Framework |
|---|---|
| USA | Blueprint for an AI Bill of Rights (non-binding guidance, 2022) |
| EU | AI Act (binding regulation, 2024) |

These frameworks aim to ensure that AI respects human rights. They focus on transparency, accountability, and privacy.

- Transparency: Knowing how AI works.

- Accountability: Being responsible for AI actions.

- Privacy: Protecting personal information.


Future Outlook: Predictions And Trends

Exploring the future outlook of modern computing reveals exciting predictions and trends. This section dives into what we might expect as technology advances.

Emerging Technologies To Watch

Several technologies are setting the stage for a transformative future in computing:

- Quantum Computing: This technology promises dramatic speedups for specific classes of problems, such as simulating molecules and materials or factoring large numbers.

- Edge Computing: More devices perform computing tasks locally, reducing latency.

- AI and Machine Learning: These will become more deeply integrated into everyday apps and services.

These technologies will speed up computing and make it more efficient and widespread. They will change how we interact with devices in our daily lives.

Potential Shifts In Computing Paradigms

The way we compute is poised for significant changes:

- From Cloud to Edge: Data processing will move closer to where data is collected.

- Privacy-First Design: New regulations will push for enhanced data protection measures.

- Decentralized Networks: Blockchain and similar technologies will encourage more peer-to-peer networks.

These shifts suggest a more personalized, secure, and efficient computing environment is on the horizon.

Keeping an eye on these trends is crucial for anyone involved in technology, from developers to business leaders.


Frequently Asked Questions

What Is The Importance Of Modern Computing?

Modern computing enables efficient data processing, enhances productivity, and drives innovation across various industries. It supports complex problem-solving and facilitates global communication and connectivity, making it essential for economic growth and societal advancement.


What Is The Role And Importance Of An Operating System In Modern Computing?

An operating system (OS) manages computer hardware and software resources, providing a user-friendly interface for computing tasks. It’s crucial for system stability, efficiency, and security, enabling seamless application execution and multi-tasking capabilities essential for modern computing.

What Is Computing And Its Importance?

Computing involves processing information through computers. It’s essential for problem-solving, data analysis, and enhancing communication in today’s digital world.

What Is The Importance Of Computer Systems Today?

Computers are essential for managing data, enhancing communication, and boosting efficiency in today’s digital era. They support education, enable remote work, and drive technological advancements across industries.

Conclusion

Understanding modern computing is crucial for navigating the digital age. It shapes how we interact, work, and live. By embracing its significance, we equip ourselves for future advancements. Let’s commit to staying informed and adaptable, ensuring our success in an evolving tech landscape.

Keep learning, keep growing.